You’ve got the tools to control how AI runs. But what about why it runs, what it’s allowed to do, and whether it complies with regulatory and organizational requirements?

Cloud platforms like Google Vertex AI now offer powerful platform-level protections, from DLP to Model Armor to agent lifecycle management. These features help secure AI and prevent runtime issues like data leakage or rogue behavior.

But they don’t solve for the bigger picture:

- Is the AI we’re building aligned with our business’s objectives, policies, and customers’ preferences?

- Is our governance flexible enough to manage dynamic, evolving AI systems both during development and in production?

- Does our business have the tools to demonstrate accountability and compliance through transparency and auditability?

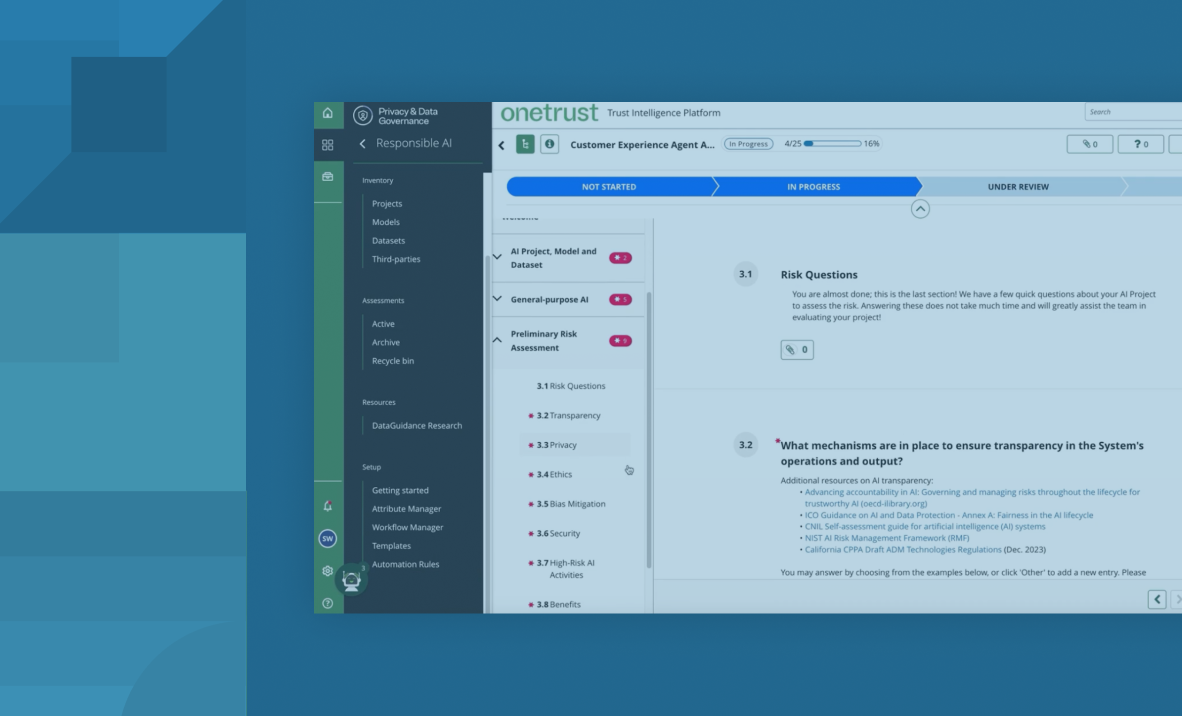

That’s where OneTrust comes in.

The missing layer: Purpose, policy, and regulatory precision

Most AI governance breakdowns don’t happen because infrastructure failed. They happen because governance was never embedded in the AI development process.

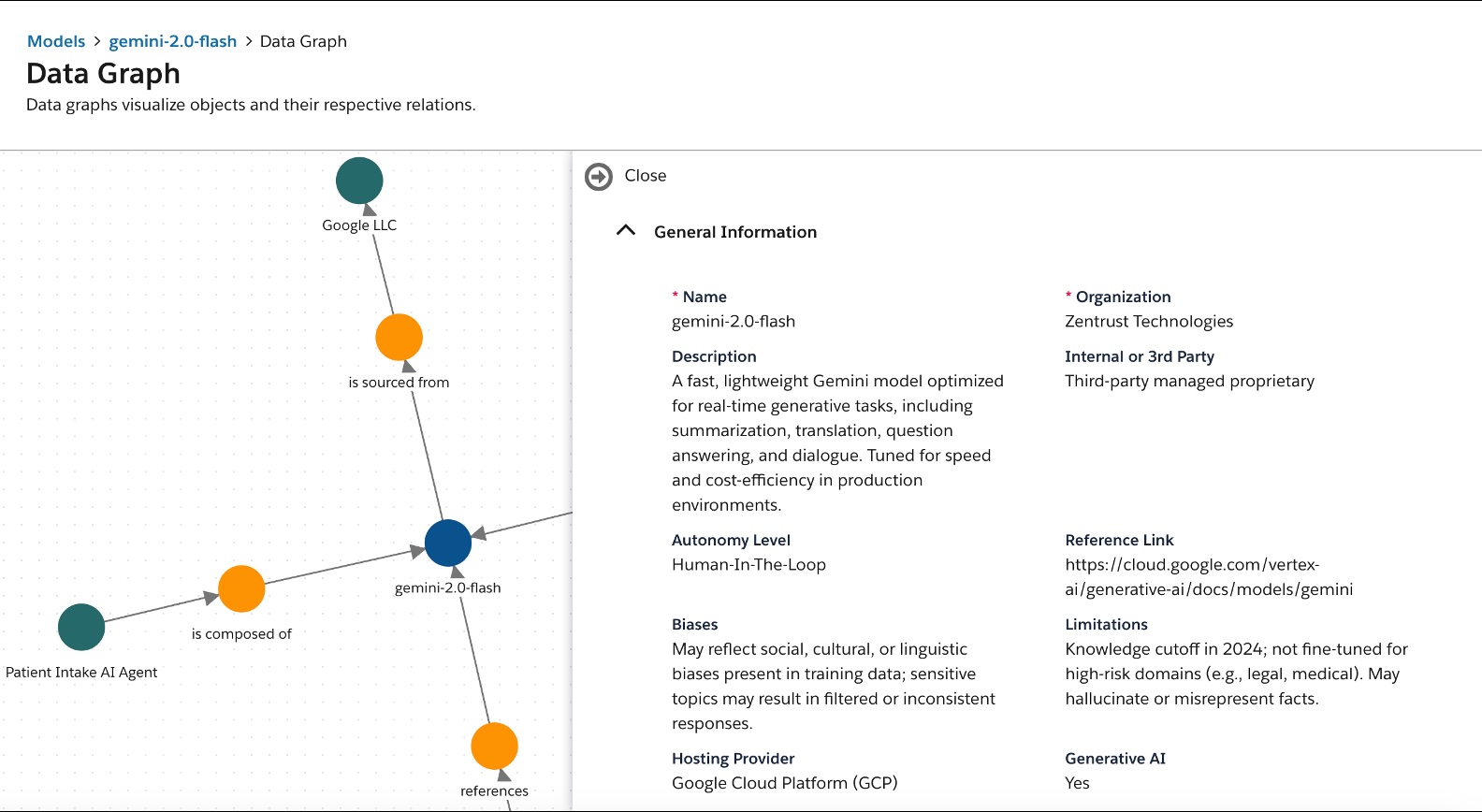

Before a model is deployed, a RAG-based system is queried, or an agent is run for the first time, teams need to know:

- What an AI system is intended to do and whether that purpose is legitimate

- What data it uses, whether that data was collected, shared, and consented to appropriately, and whether it is fit for purpose

- What risks it introduces and how those risks map to regulations, industry standards, and internal policies

Today, this context is missing from AI pipelines. As a result, teams ship AI systems that pass technical checks but fall far short of regulatory, ethical, and organizational expectations, not to mention their customers’ expectations about the responsible use of their data.

Where governance breaks down

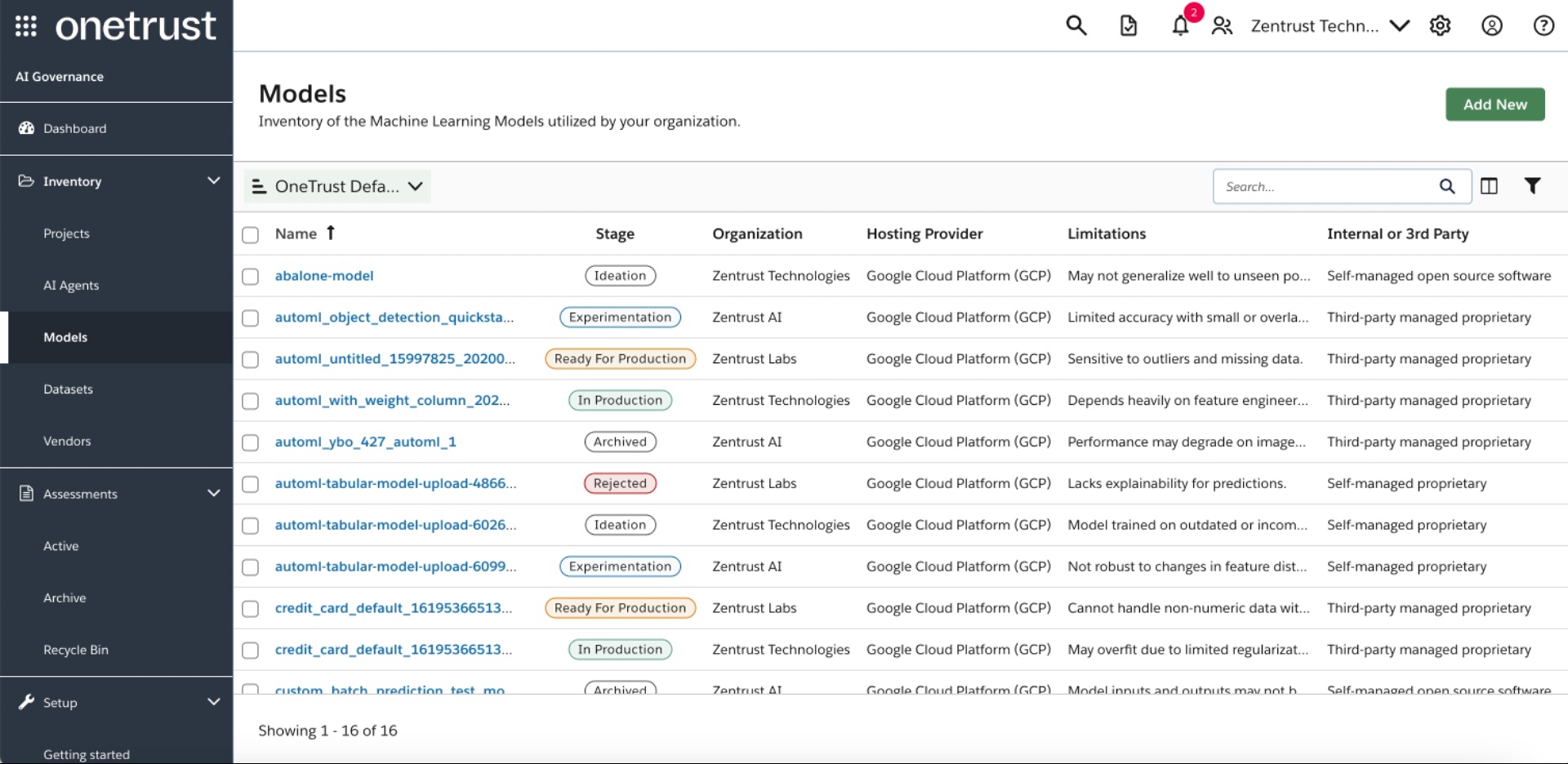

Even among teams with mature MLOps practices, most struggle to:

- Connect models, agents, and other AI systems to clearly documented business purposes

- Tag and track datasets based on consent, regulatory requirements, and intended use

- Integrate risk and impact reviews as part of the development lifecycle

- Demonstrate accountability and decision traceability during audits or internal reviews

These aren’t engineering problems; they’re governance gaps. Left unfilled, they can cause even well-meaning AI programs to create risk, slow delivery, or erode stakeholder trust.

OneTrust + Vertex AI: Embedding trust into the AI stack

OneTrust has integrated directly with Google Vertex AI to give teams building on Google Cloud a scalable way to enforce governance from the start of every AI journey. This isn’t just about privacy automation. It’s about full-stack, policy-driven compliance and oversight for AI systems.