Artificial Intelligence (AI) is transforming the business landscape. However, unlike earlier technological advances such as mobile computing and data warehousing, AI has distinctive characteristics that make its behavior harder to predict and its risks more challenging to manage. This is where privacy professionals play a critical role in ensuring responsible AI adoption within organizations, alongside peers in security, ethics, and ESG.

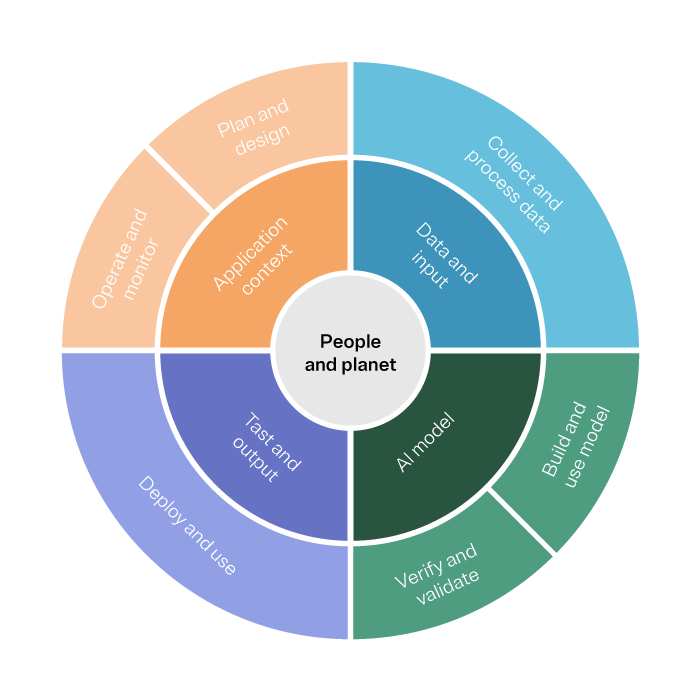

Cross-collaboration is critical for organizations that aspire to build holistic trust programs. With AI taking up an increasing amount of column space, privacy professionals can help board members, CEOs, and organizational peers see clearly how existing Privacy by Design practices and frameworks can serve as a launchpad for Responsible AI governance and a central driver of trust.

In this blog, we explore how you can help your organization understand the responsible-use considerations, risks, and potential benefits of incorporating AI into its business strategy while maintaining a strong focus on data privacy.

Guiding your board and CEO through responsible AI

1. Educate on responsible AI and data privacy

Privacy professionals must help board members and CEOs build AI literacy. Board members and CEOs don't need an in-depth technical understanding of AI, but they should understand the ethical implications and data privacy risks associated with its development. To do this:

- Provide clear, plain-language explanations of AI concepts, technologies, and their potential impact on privacy.

- Share examples of AI successes and failures, highlighting the impact and possible sources of bias.

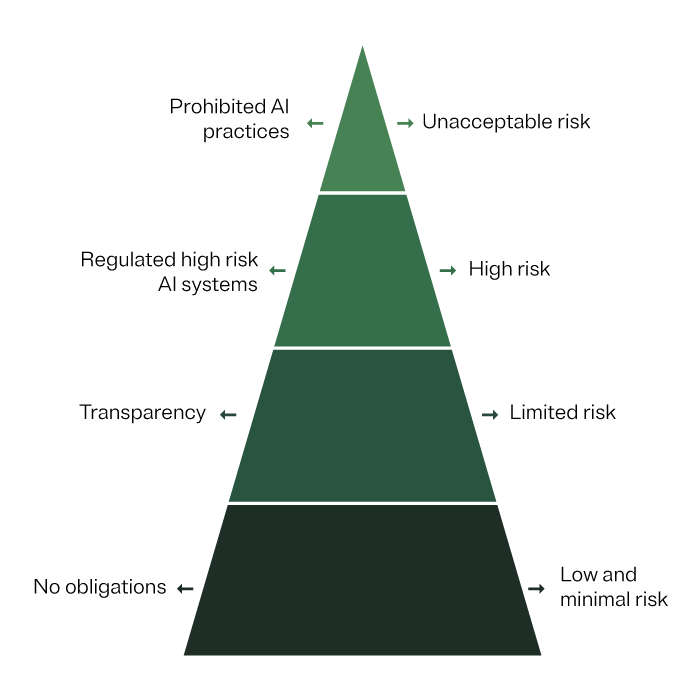

- Help them understand the unique risks AI introduces and the upcoming regulatory and compliance requirements.

- Show how introducing AI governance for existing systems can lead to responsible AI use.

- Demonstrate the value of collaboration between privacy and data ethics teams.

2. Develop and advocate for a comprehensive AI strategy and responsible AI framework

Privacy professionals should collaborate with board members and CEOs to develop an AI strategy that supports a strong responsible AI framework and integrates privacy considerations across the organization. To ensure responsible and ethical AI development and deployment, consider the following steps: